This is a high-level, non-technical introduction to load balancers. A more technical version will follow shortly.

The cloud is attractive today in part because of its agility and scalability. You can use as many or as few resources as you require, when you need them, and pay for just what you use thanks to the usage-based billing employed in the cloud.

In this article, we will be looking at load balancers. As a cloud concept, load balancers are critical to the nimbleness and agility that make the cloud so attractive. The other side of the coin is ‘Auto Scalers’. Watch out for my article on auto scalers in the cloud.

💡 Nugget: Keep these two main points in mind at all times when thinking about load balancers:

1. They distribute traffic.

2. They ensure traffic is equally distributed amongst all available endpoints.

In the simplest sense, the cloud operates as a distributed system and a load balancer is a distributor of sorts. ‘Of sorts’ because a typical load balancer employs different types of mechanisms and protocols to do its job.

Its primary job is to ensure that:

- No single device is overloaded with traffic to the point of causing a service outage.

- Traffic gets to its intended endpoint.

- High-volume traffic is moved as quickly and as efficiently as possible.

- Where required, the right intelligent decision is made based on the identified protocol and/or port numbers.

Load balancers rely on either IP or HTTP(S) to do this.

Types of Load Balancers:

- Network Load Balancers

- Application Load Balancers

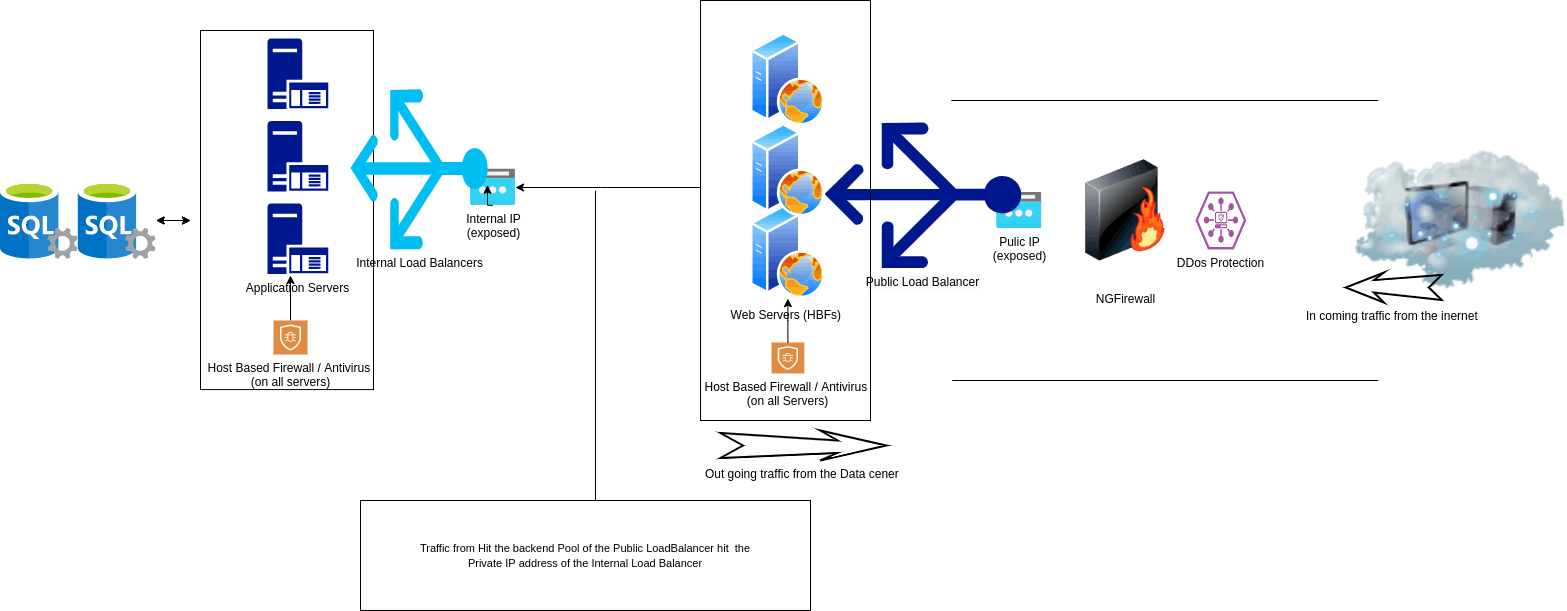

Network Load Balancers

Network load balancers (also called NLBs) focus on network traffic and are usually placed at a central point or network edge where traffic comes into the network. They can also be placed midstream within the network to facilitate efficient forwarding of high-volume traffic.

- A network load balancer works at the lower layers of the OSI model (Layer 3, and in most cloud offerings Layer 4), so it sees the Internet Protocol (IP) header and uses IP addresses for its primary decision-making.

- Network load balancers distribute massive amounts of traffic in the quickest, most efficient way possible.

- Traffic is sent to other networks, subnets, routers or internal load balancers as the case may be, and not to actual end devices (servers, VMs, etc.).

- The focus here is ‘speed’, in order to avoid congestion.

- There are physical and virtual load balancers depending on where your infrastructure resides (on-prem or cloud)

- Virtual load balancers are usually software (virtualized) versions of the physical on-prem load balancers from companies like F5.

- Most Cloud Solution Providers (CSPs) have also developed their versions of cloud-native load balancers specific to their platform

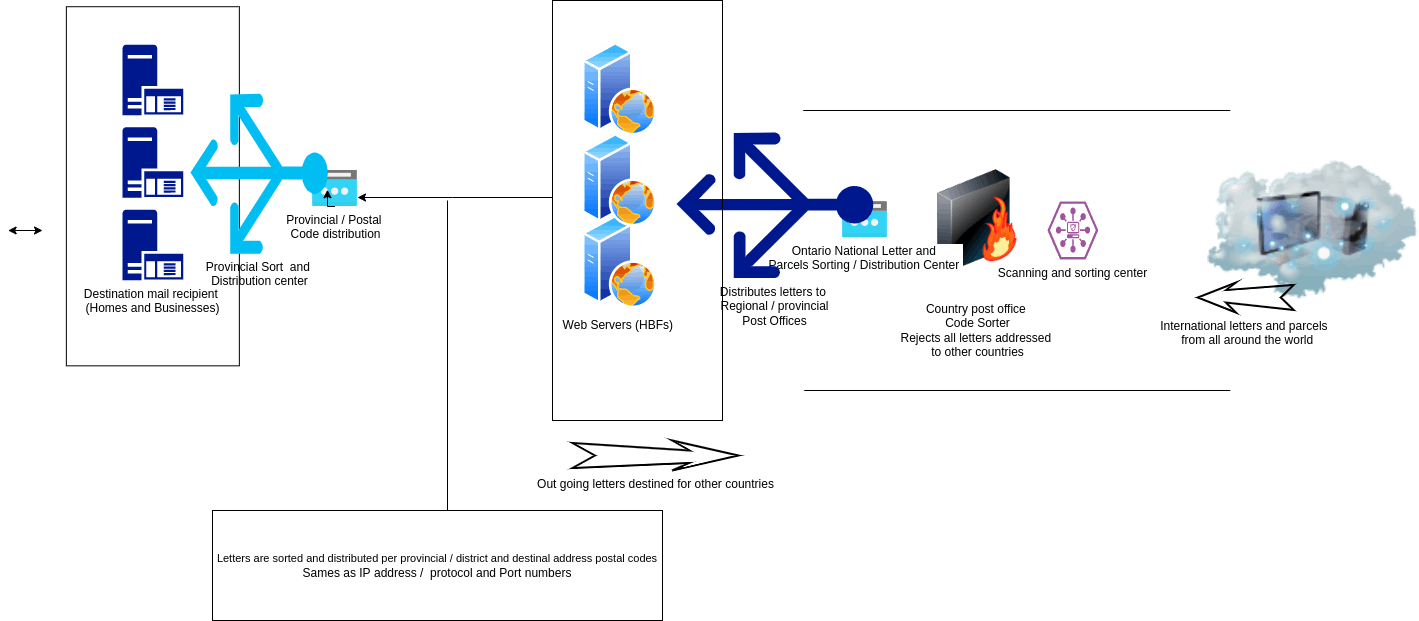

How Load Balancers Work

To help us understand how load balancers work, we will use the analogy of the post office and the postman.

Letters come into a central post office from all over the world. Now if the central post office tries to sort each letter by its specific address, it will take ages to deliver a single letter. In fact, it will be an almost impossible task given the volume of letters that keeps arriving by the second.

So, just like network load balancers, the central post office has to sort by region codes and quickly move the letters in the direction of the respective regions. I am in Canada, so I will use Canadian regions.

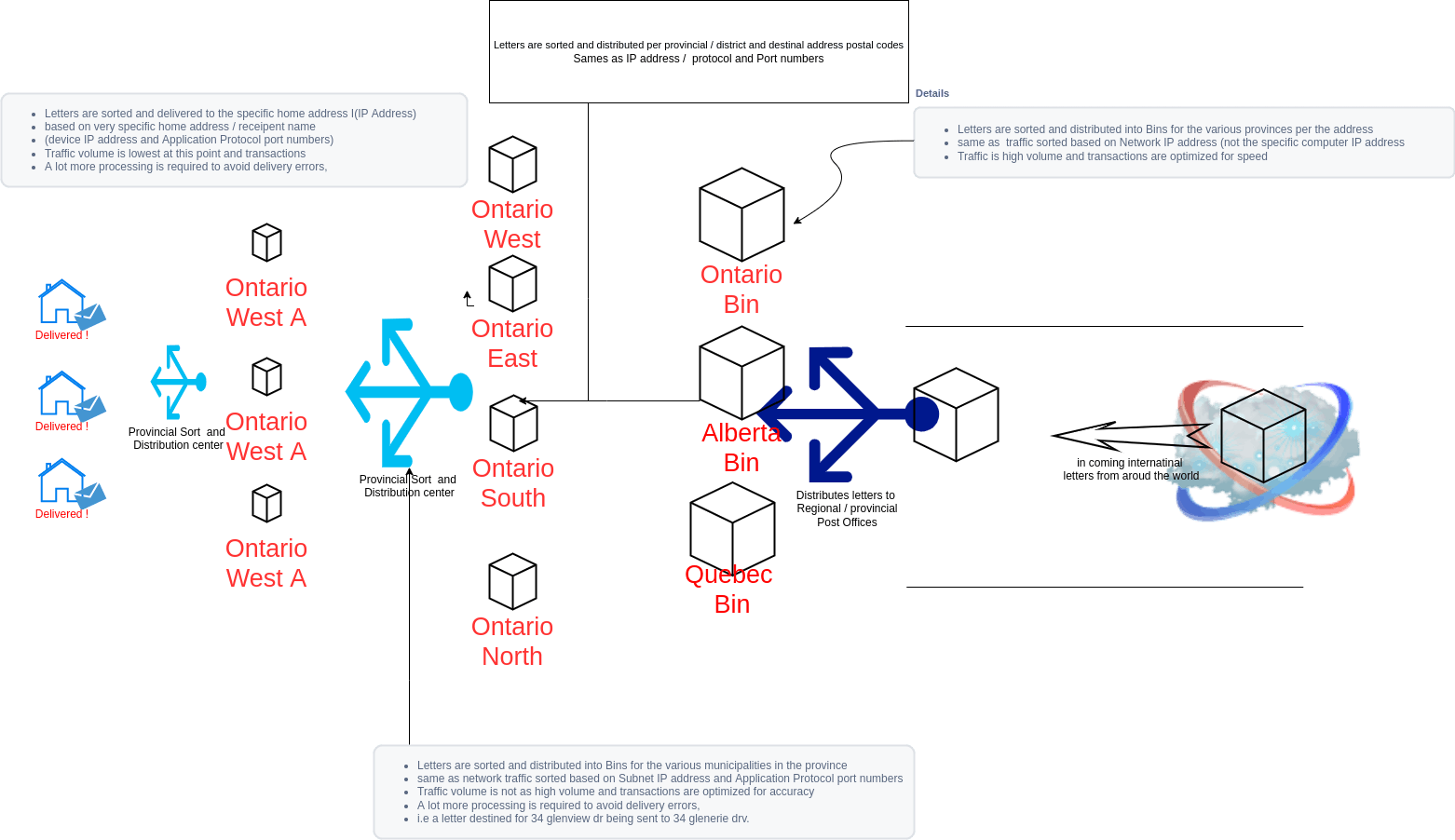

All letters with ON (Ontario) on the address get dumped into the Ontario bin. The same goes for British Columbia, Alberta, etc.

The central sorter does not care which municipality a specific letter is bound for within the region; it just needs to get the letter headed to the right region. That's how NLBs work. An NLB knows all the network subnets or Virtual LANs (VLANs) in the main network by IP address segmentation.

When traffic arrives at the device acting as the NLB, it reads the IP header and moves the packet in the direction of the matching subnet or VLAN, as the case may be.
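To make the region-code idea concrete, here is a minimal Python sketch of picking a subnet from a destination IP address. The subnet names and address ranges are made up for illustration (they simply mirror the bins in the analogy), and a real NLB does this in specialised hardware or software rather than in a script like this.

```python
import ipaddress

# Hypothetical subnets, named after the regional bins in the analogy.
SUBNETS = {
    "ontario": ipaddress.ip_network("10.1.0.0/16"),
    "british-columbia": ipaddress.ip_network("10.2.0.0/16"),
    "alberta": ipaddress.ip_network("10.3.0.0/16"),
}


def pick_subnet(destination_ip):
    """Return the name of the subnet containing destination_ip, or None."""
    address = ipaddress.ip_address(destination_ip)
    for name, network in SUBNETS.items():
        # Only the IP address is inspected, nothing deeper in the packet.
        if address in network:
            return name
    return None


print(pick_subnet("10.1.42.7"))   # falls in the Ontario range
print(pick_subnet("10.3.0.15"))   # falls in the Alberta range
```

Notice that the function never looks past the address itself, which is the point of the analogy: the central sorter reads the region code and nothing else.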

Note that the same packet carries other information that it could have read (like the TCP header or the application data inside it). All of this is valid information, but reading it would slow down the NLB’s primary job of quickly moving data in the direction of its destination, just like the central post office. The focus is to move the data out as soon as it comes into the load balancer.

Now to the second function of NLBs. Imagine that there are 20 bins (numbered 1 through 20) into which incoming letters destined for the 20 municipalities in Ontario are deposited. The system is designed so that all bins receive approximately the same number of letters over any given period.

A pickup truck picks up each bin in turn and moves the contents to the municipal post office for further processing. We can see that the massive volume of letters (traffic) arriving from all over the world at the central post office has now been quickly and effectively moved down the line towards the different municipal post offices.

But what if the central sorter drops all Ontario-bound letters into Bin 1 while ignoring Bins 2 to 20? Before long, Bin 1 will fill up and overflow.

Also, what if the central sorter misreads or ignores the code for municipality A and dumps municipality A’s letter into municipality B’s bin?

And what if no one is designated to move the overflow into the other bins? This is what the situation will be if there are no load balancers or if the load balancer is misconfigured. Unsatisfied customers all the way!

This is the result of those what-ifs: the letters are lost (a service outage due to massive packet loss). You will get angry citizens who don't get their letters or, in cloud terms, angry customers who don’t get their Amazon orders, or corporate deals that go south because of data miswrites. The ability to get data packets to their intended endpoint on time and in a safe manner is one of the most critical processes in the cloud.

What the NLB does is ensure that all bins 1 to 20 (downstream LBs and/or endpoint virtual machines) get an equal, or as close to equal as possible, volume of letters dumped into them at any given time.
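One simple way to get that even spread is to hand out letters to the bins in turn, a strategy usually called round robin. The Python sketch below is only an illustration of that idea under the analogy's assumptions (20 bins, letters that all cost the same to handle); the names are hypothetical and real load balancers offer several other strategies.

```python
from collections import Counter
from itertools import cycle


def distribute(letters, bins):
    """Assign each letter to the next bin in turn (round robin)."""
    assignment = {}
    next_bin = cycle(bins)  # endlessly repeats bin-1, bin-2, ..., bin-20
    for letter in letters:
        assignment[letter] = next(next_bin)
    return assignment


bins = [f"bin-{n}" for n in range(1, 21)]
letters = [f"letter-{n}" for n in range(100)]

counts = Counter(distribute(letters, bins).values())
print(counts["bin-1"], counts["bin-20"])  # 5 5
```

With 100 letters and 20 bins, every bin ends up with exactly 5 letters, so no single bin overflows while the others sit empty.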

It also ensures that the right bin gets the letters with the right municipal codes on them. In cloud terms, the right packets are delivered to the right VLANs, through which they get to the right virtual machines for processing.

As such, no mail is lost due to overflow and there are no angry citizens due to lost mail. In compute terms, no packet is lost due to buffer overflow at the receiving virtual machines (VMs) and there is no service outage due to overwhelmed VMs. The result is happy clients for your website, the application on your phone, your web store or your in-office app, as the case may be.

Application Load Balancers (ALBs)

Note: ALBs, just like NLBs, also:

- Distribute traffic

- Ensure traffic is equally distributed amongst all available parties, with the added responsibility of ensuring that the “right application” gets the right data

- Need more processing to do so (which takes more time and CPU)

- Usually sit behind Network Load Balancers and get their traffic from NLBs

- Work with application-level protocols such as HTTP and HTTPS (raw TCP and UDP traffic is typically the job of the NLB)

An Application Load Balancer (to continue our analogy) is the downstream post office: the municipal or community post office. These offices are closer to the mail recipients and use a combination of the postal code and the home address, instead of just the regional code, to direct the mail.

While the central post office uses the regional code to get the mail to the region, the municipal and community post offices use both the postal code and the home address to get the mail into the hands of the end-user.

This is the same as an Application Load Balancer looking into the application-layer information of a request (for example, its HTTP headers) to see which application needs this specific traffic. It needs to determine whether the traffic is meant for, say, a word processing app, a messaging app, or a video rendering app.
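As a rough illustration of an ALB choosing the right application, the Python sketch below routes a request based on its URL path. The path prefixes and pool names are hypothetical, and real ALBs can also route on other request details such as the host name; this is only meant to show the kind of decision being made.

```python
# Hypothetical routing rules: URL path prefix -> backend pool name.
ROUTES = [
    ("/chat", "messaging-service"),
    ("/render", "video-rendering-service"),
]
DEFAULT_POOL = "web-frontend"


def pick_pool(path):
    """Return the backend pool that should handle a request for this path."""
    for prefix, pool in ROUTES:
        # Match the prefix itself or anything underneath it,
        # so "/chat/room/1" matches "/chat" but "/chatter" does not.
        if path == prefix or path.startswith(prefix + "/"):
            return pool
    return DEFAULT_POOL


print(pick_pool("/chat/room/1"))  # messaging-service
print(pick_pool("/render"))       # video-rendering-service
print(pick_pool("/about"))        # web-frontend
```

Compared with reading only an IP address, this requires opening up the request and inspecting its contents, which is why this kind of decision costs more time and CPU.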

Side Note: While application load balancers can be used effectively to distribute data in conjunction with a network load balancer in an N-Tier architecture, they are more commonly used in Microservices architectures, because the volume of data that runs through a typical N-Tier architecture is much larger than in a Microservices architecture. Another reason is that a Microservices architecture has a higher requirement for intelligent decision-making when routing data, and application load balancers are better suited to this.

- ALBs are much slower than NLBs

- They are also much more intelligent

- They make decisions on the fly that must be correct

- They also use a lot more power and processing

- They are closer to the destination application that will utilize the data.

Just like any load balancer, ALBs must also ensure they distribute data equally amongst all available parties, i.e. an equal volume into Bins 1 to 20 and not just Bin 1.

Why Do We Use Load Balancers?

- To distribute data

- To prevent service outages due to overwhelmed systems

- To improve system performance

- To support business continuity in the event of unexpected failures or traffic spikes caused by external events like festive holidays

Configuring Load Balancers

For this introductory article, I will only mention how load balancers can be configured in the cloud.

- You can configure a load balancer from the console or portal of the CSP you are using, for example AWS or Azure

- You can also achieve the same using the version of cloud shell available to you within your CSP

- My preferred method to configure and manage load balancers at scale is via an infrastructure as code (IaC) tool like Terraform.

Next Steps

This is the first in a series that aims to introduce the concept of load balancers. Next, we will dive deep into load balancer functionalities and configuration across various platforms, including but not limited to AWS, Azure, and on-prem with third-party solutions.